The Missing Layer in AI for Marketing and Operations

Using AI to challenge assumptions before execution locks them in

Hey Productivity Explorer,

Earlier this week, I was reviewing an AI rollout with a marketing leader who was genuinely doing everything right.

They had adopted AI aggressively. Campaign creation was faster. Reporting cycles had shortened. The team was shipping more work with fewer people. On paper, it looked like a textbook example of AI-driven leverage.

This, in fact, reflects what we saw at a macro level, where US productivity increased by 5% with a smaller workforce.

But halfway through the conversation, something felt off.

Every improvement we talked about lived downstream of a decision that had already been made. Scale the channel. Automate the workflow. Increase throughput. AI was helping them execute those choices cleanly and efficiently, but no one had slowed down to revisit whether the assumptions underneath those choices were still holding.

That’s when it clicked.

AI hadn’t created a new problem. It had removed the friction that used to hide an old one.

When execution becomes cheap, you stop feeling the cost of being wrong. You can launch before you’ve really thought. You can scale before you’ve pressure-tested. You can automate before you’ve separated judgment from task. AI makes all of that easier.

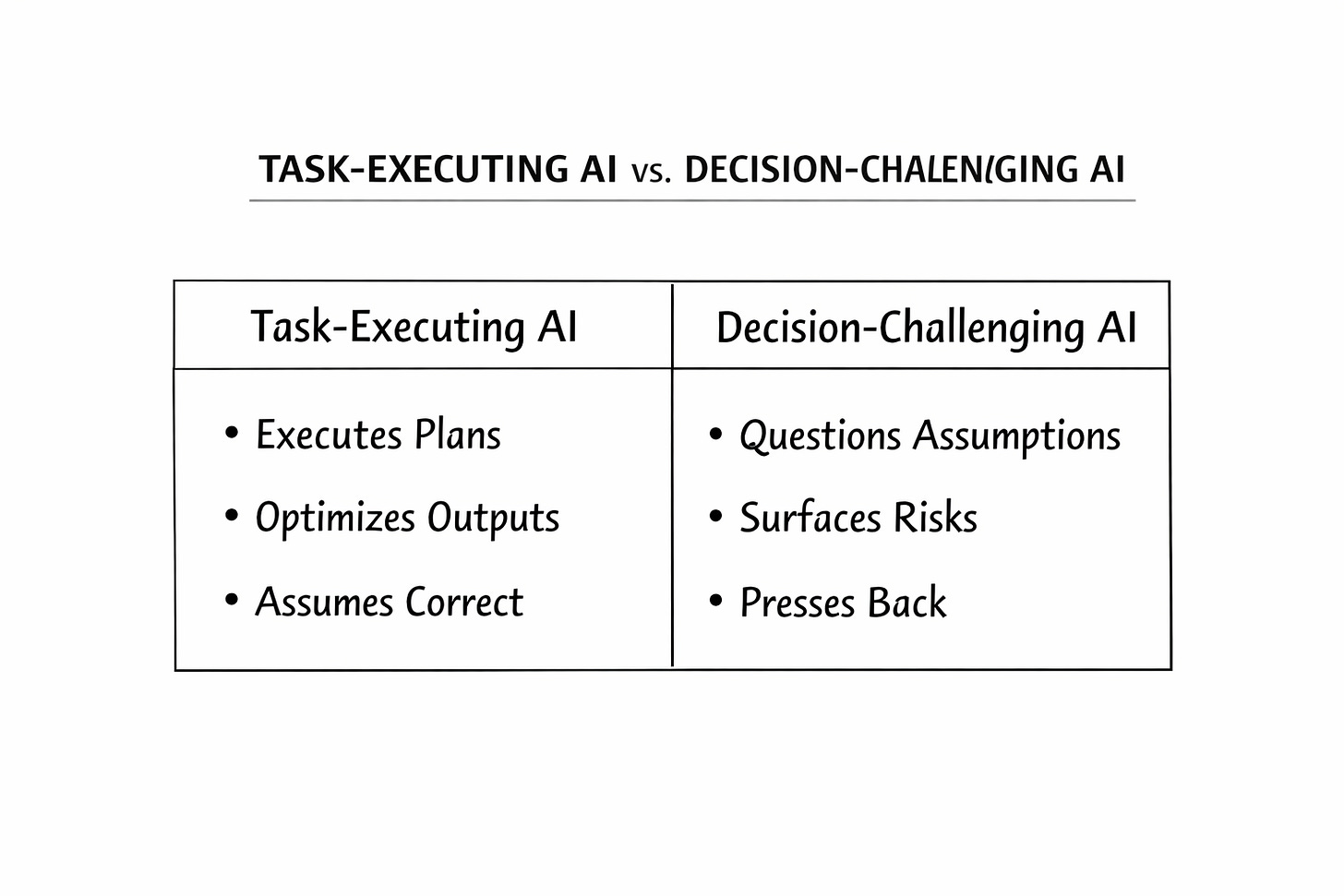

What it doesn’t do, by default, is question the decision itself.

Most AI systems quietly assume that part is settled. They take your intent as correct and focus on optimizing everything that comes after it. If the judgment is sound, that’s powerful. If it isn’t, AI just helps you commit to it faster.

That’s why this problem is so hard to see. AI appears to be working. Output goes up. Friction goes down. Activity increases.

But if the original judgment was off, all AI is doing is scaling the mistake.

Table of Contents

AI Scales Output, Not Judgment

The Decisions You Think Are Tactical (But Aren’t)

Why Most AI Shows Up After the Damage Is Done

What Changes When AI Is Allowed to Push Back

Two Decisions, Replayed

How to Build a Decision Cockpit (Mega Prompt for ChatGPT/Claude or Gemini)

The Decisions You Think Are Tactical (But Aren’t)

The decisions that cause the most damage rarely feel strategic when you’re making them.

They show up as reasonable, almost boring calls.

Should you put more budget behind a channel that’s working?

Should you add headcount to relieve pressure?

Should you automate a workflow that keeps slowing the team down?

In the moment, these feel like operational cleanups, not big bets.

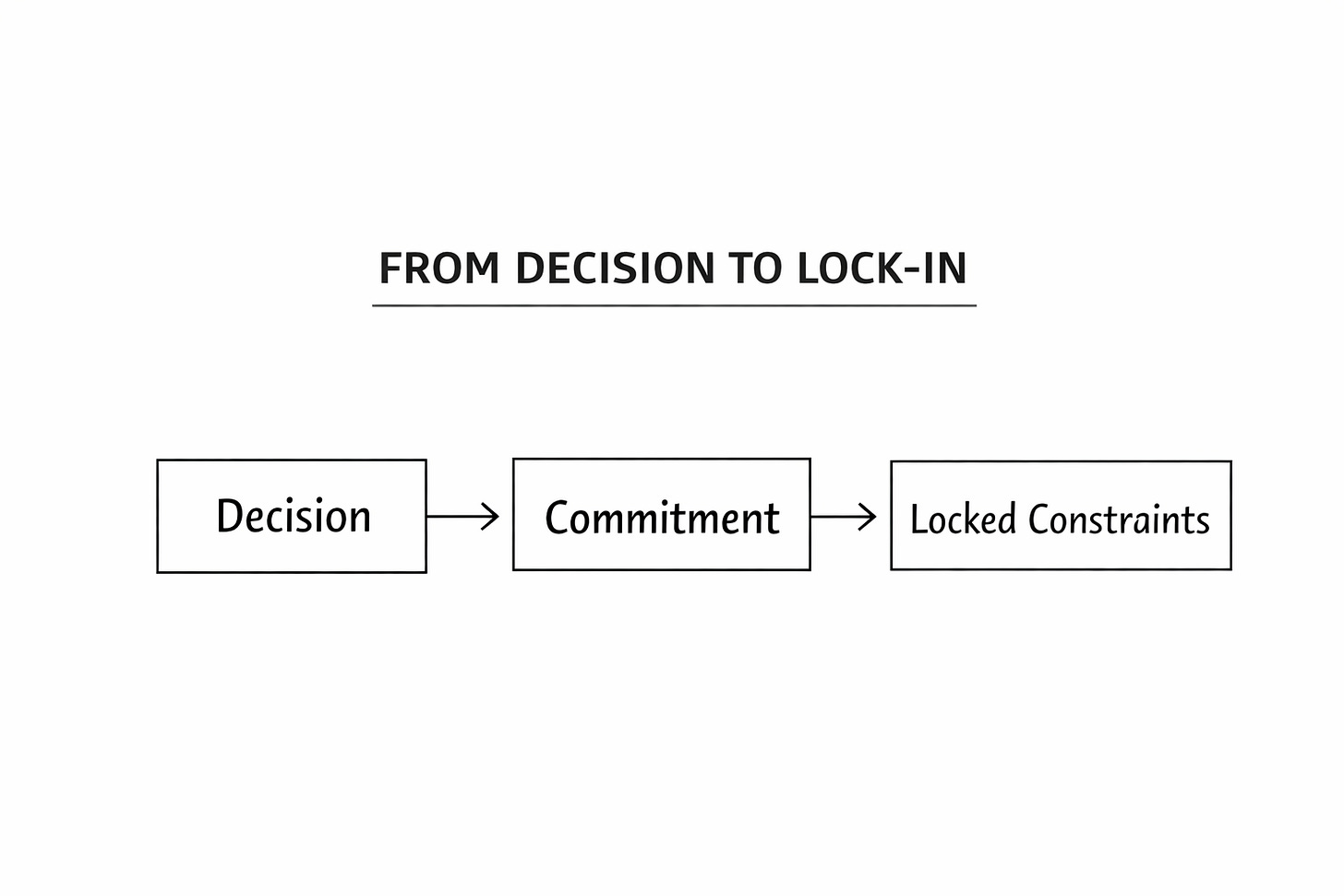

Behavioral research suggests this is exactly why they’re dangerous. Work on escalation of commitment and sunk cost effects, popularized by Daniel Kahneman, shows that once resources are committed, people systematically reinterpret new information to justify the original decision rather than revisit it. The decision quietly becomes irreversible long before anyone labels it that way.

In marketing and operations, this irreversibility hides in plain sight. Budget decisions harden into cost structure. Headcount decisions harden into operating cadence. Automation decisions harden into how errors propagate at scale. What looked like a small optimization turns into a constraint the organization now has to live inside.

Organizational theorists have described this as path dependence. Early choices, even minor ones, shape the range of future options, not because they were optimal, but because reversing them becomes costly, politically difficult, or operationally disruptive. By the time second-order effects appear, the decision itself is no longer open for debate.

That’s the trap.

These decisions don’t announce themselves as commitments. They arrive disguised as tactics. And once execution begins, the organization starts reorganizing itself around them. Spend reallocates. Teams adapt. Processes calcify. What you thought was a temporary move becomes the default operating model.

At that point, you’re no longer deciding whether it was the right call.

You’re deciding how to manage the consequences of a decision that has already locked itself in.

Why Most AI Shows Up After the Damage Is Done

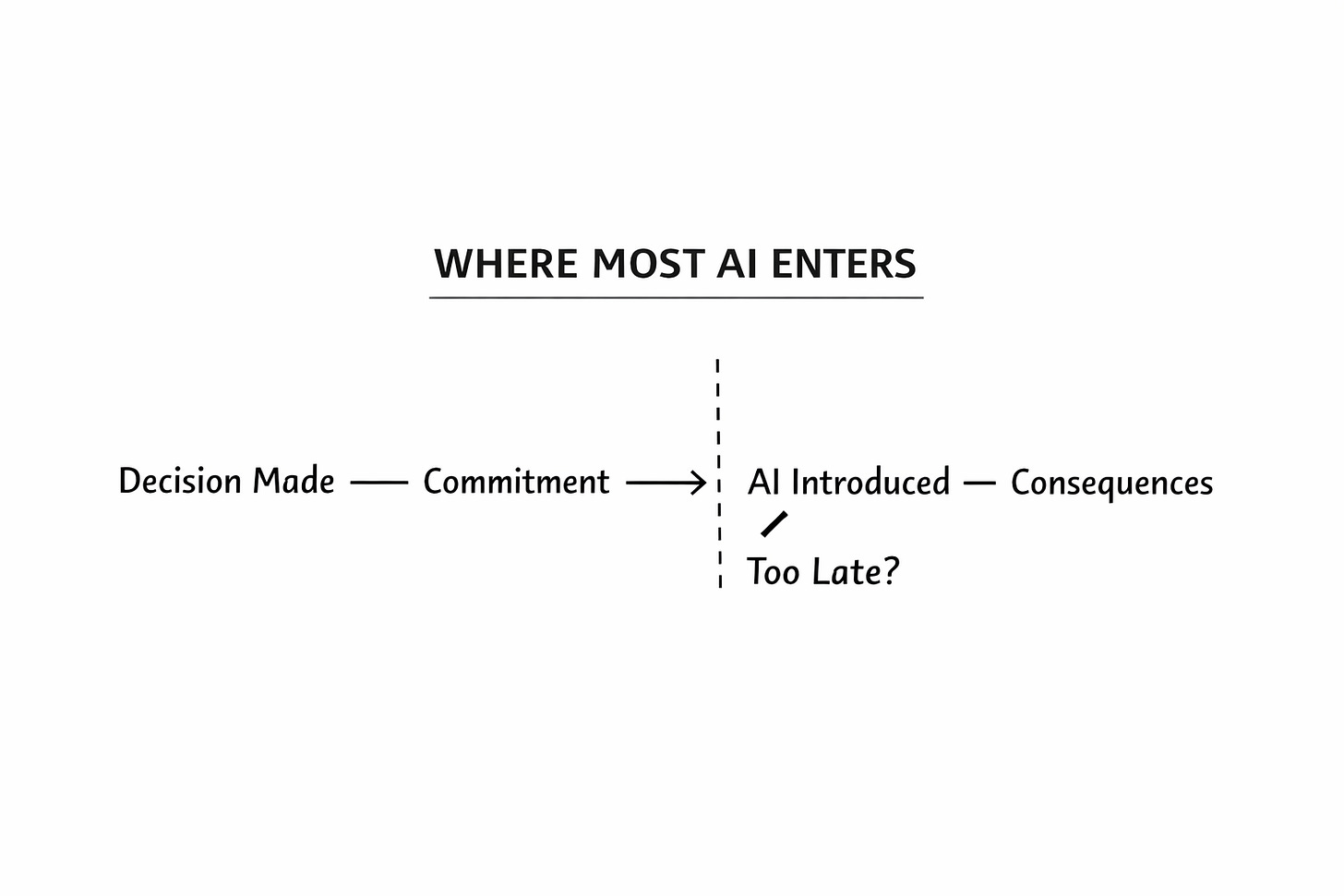

Think about the last time AI meaningfully entered one of your decisions.

It probably wasn’t at the moment you were deciding whether to scale spend, add headcount, or automate a workflow. It showed up later. After the budget was approved. After the role was opened. After the process was already live. AI arrived to help you execute something that had already been decided.

You see this most clearly in marketing. A channel starts to work, so you increase spending. Only after spending increases do you bring in better forecasting, attribution models, or AI-driven optimization. Those systems help you tune bids, improve creative, and manage efficiency.

What they don’t do is ask whether the channel should have been scaled in the first place, or what assumptions quietly broke the moment volume doubled.

The same thing happens in operations. A workflow becomes a bottleneck, so it gets automated. AI is brought in to route tickets, classify requests, or speed up resolution. It works. Throughput improves.

But only later do you realize that the automation removed the last point where judgment was being applied, and now edge cases propagate faster than the team can detect them.

In both cases, AI isn’t failing. It’s doing exactly what it was designed to do.

The failure is in when it’s allowed to participate. AI is invited in after commitment, not before it. By the time it starts producing insight, the organization has already reorganized itself around the decision. New information doesn’t trigger reconsideration. It triggers an explanation.

That’s why AI so often feels reactive. It helps you manage the consequences of decisions instead of challenging them while they’re still reversible.

And once execution is underway, the most important question is already off the table.

What Changes When AI Is Allowed to Push Back

Everything changes when AI is no longer treated as a neutral executor.

Most tools are designed to be agreeable. You give them a goal, constraints, and inputs, and they do their best to comply. That sounds helpful, but it also means the system is structurally incapable of telling you that the goal itself might be wrong.

Now imagine AI showing up earlier, when the decision is still forming, and behaving differently.

Instead of asking you to specify targets, it asks you what has to be true for this decision to work. Instead of optimizing inputs, it interrogates assumptions. When you smooth a number to make the model behave, it pauses and points out what you just did. When a conclusion survives only because three optimistic assumptions stack neatly on top of each other, it surfaces that fragility explicitly.

This doesn’t feel like automation. It feels like resistance.

And that resistance is the point. It forces you to slow down at exactly the moment where speed is most dangerous. It separates what you know from what you’re assuming. It makes tradeoffs visible before they’re locked in by execution.

The most important shift is psychological. When AI pushes back, the decision no longer feels like a personal judgment call you have to defend. It becomes a shared object that can be examined, stressed, and revised. You’re not arguing with the system. You’re using it to argue with yourself, more honestly than time or politics usually allow.

That’s the difference.
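To see why stacked assumptions are fragile, here is a minimal sketch in Python. The three assumptions and their 80% confidence levels are made up for illustration, and the calculation assumes they are independent, which real assumptions often are not.

```python
from math import prod


def joint_probability(probabilities):
    """Chance that every assumption holds, assuming they are independent."""
    return prod(probabilities)


# Hypothetical assumptions and confidence levels, for illustration only.
assumptions = {
    "CAC stays flat as spend doubles": 0.8,
    "Conversion rate holds at higher volume": 0.8,
    "Creative fatigue does not set in this quarter": 0.8,
}

joint = joint_probability(assumptions.values())
print(f"Each assumption looks safe alone; all three hold together {joint:.0%} of the time")
```

Each assumption feels comfortable on its own, yet the conclusion that depends on all three is close to a coin flip. That gap is what a cockpit session should surface.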

Two Decisions, Replayed

Take a marketing decision you’ve probably made before.

A paid channel starts to perform. CAC looks acceptable. Volume is there. So you approve more budget. Nothing about this feels reckless. In fact, it feels responsible. You’re backing what’s working.

Now imagine AI in the room at that moment. Not to optimize bids or generate creatives, but to ask uncomfortable questions while the decision is still forming. What happens to CAC when spending doubles and inventory quality drops? Which signal tells you saturation has begun, and how early does it show up? If conversion softens slightly, where does the economics actually break? The decision stops being “should we scale?” and becomes “what assumption are we most exposed to if we do?”
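Those questions can be turned into a rough pressure test. The sketch below assumes a simple diminishing-returns curve (customers grow as spend raised to an `elasticity` below 1) and uses made-up numbers for spend, CAC, and the highest CAC the business can tolerate. None of this comes from a real channel; the point is to vary one assumption and watch where the economics break.

```python
def cac_at_spend(base_spend, base_cac, new_spend, elasticity):
    """CAC at a new spend level under a simple diminishing-returns model.

    Assumes customers scale as spend ** elasticity. An elasticity of 1.0
    means no saturation; lower values mean each extra dollar buys fewer
    customers. The curve itself is an assumption, not a measured fact.
    """
    base_customers = base_spend / base_cac
    new_customers = base_customers * (new_spend / base_spend) ** elasticity
    return new_spend / new_customers


# Hypothetical inputs, for illustration only.
base_spend = 50_000       # current monthly spend
base_cac = 100.0          # current cost per acquired customer
max_viable_cac = 130.0    # above this, the unit economics stop working

# Vary one assumption (how fast the channel saturates) and hold the rest fixed.
for elasticity in (1.0, 0.8, 0.6, 0.4):
    cac = cac_at_spend(base_spend, base_cac, 2 * base_spend, elasticity)
    status = "holds" if cac <= max_viable_cac else "BREAKS"
    print(f"elasticity {elasticity:.1f}: CAC at 2x spend = {cac:6.2f} ({status})")
```

In this toy model, doubling spend is fine if the channel barely saturates and fails once elasticity drops to around 0.6. The useful output is not the number. It is knowing which assumption you are most exposed to.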

The same replay works in operations.

A workflow keeps creating friction. It slows the team down. Errors happen. Automation feels like the obvious fix. So you move forward. Throughput improves almost immediately, which reinforces the decision.

Now replay that moment with AI allowed to push back. Which parts of this workflow are pure tasks, and which parts quietly relied on human judgment? What kinds of errors are rare but catastrophic? What happens when volume increases before those edge cases are visible? The decision stops being “can we automate this?” and becomes “where does judgment still matter even at scale?”
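The volume question has simple arithmetic behind it. This sketch assumes a hypothetical edge case that hits 1 in 1,000 tickets, independently of the others, and compares a manual weekly volume with an automated one. Both volumes are invented for illustration.

```python
def chance_of_at_least_one(error_rate, volume):
    """Probability that at least one rare failure occurs in `volume` items,
    assuming each item fails independently at `error_rate`."""
    return 1 - (1 - error_rate) ** volume


error_rate = 0.001  # hypothetical: 1 in 1,000 tickets is a costly edge case

# Hypothetical weekly volumes before and after automation.
for label, weekly_volume in (("manual", 200), ("automated", 5_000)):
    p = chance_of_at_least_one(error_rate, weekly_volume)
    expected = error_rate * weekly_volume
    print(f"{label:>9}: {weekly_volume:>5} tickets/week, "
          f"P(at least one) = {p:.0%}, expected count = {expected:.1f}")
```

At manual volume, the rare failure is something you might not see for weeks. At automated volume, it is close to a weekly certainty, and no human is left in the loop to catch it.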

In both cases, nothing about the decision itself changed.

What changed was the timing and role of AI. Instead of arriving after commitment to make things more efficient, it showed up before commitment and made the decision harder to make, for the right reasons.

That’s the difference between using AI to scale execution and using it to protect judgment.

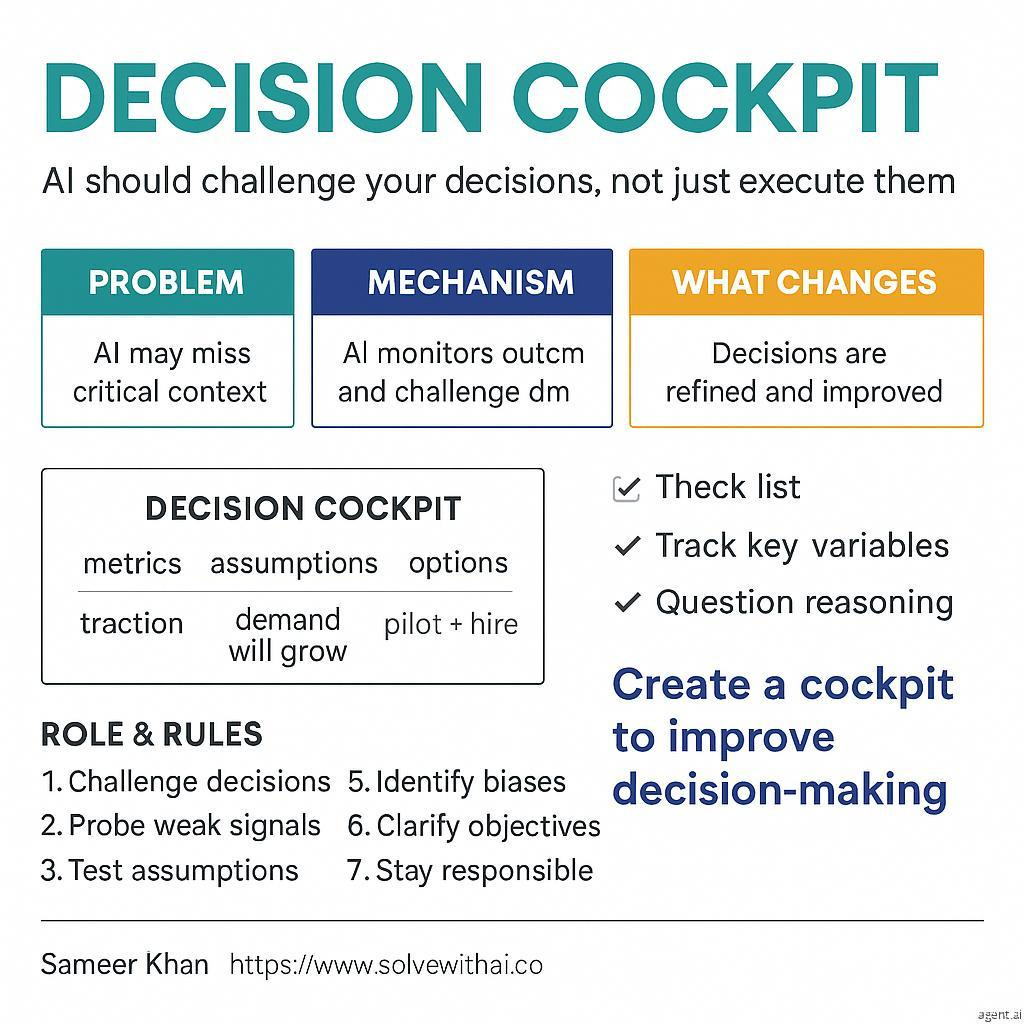

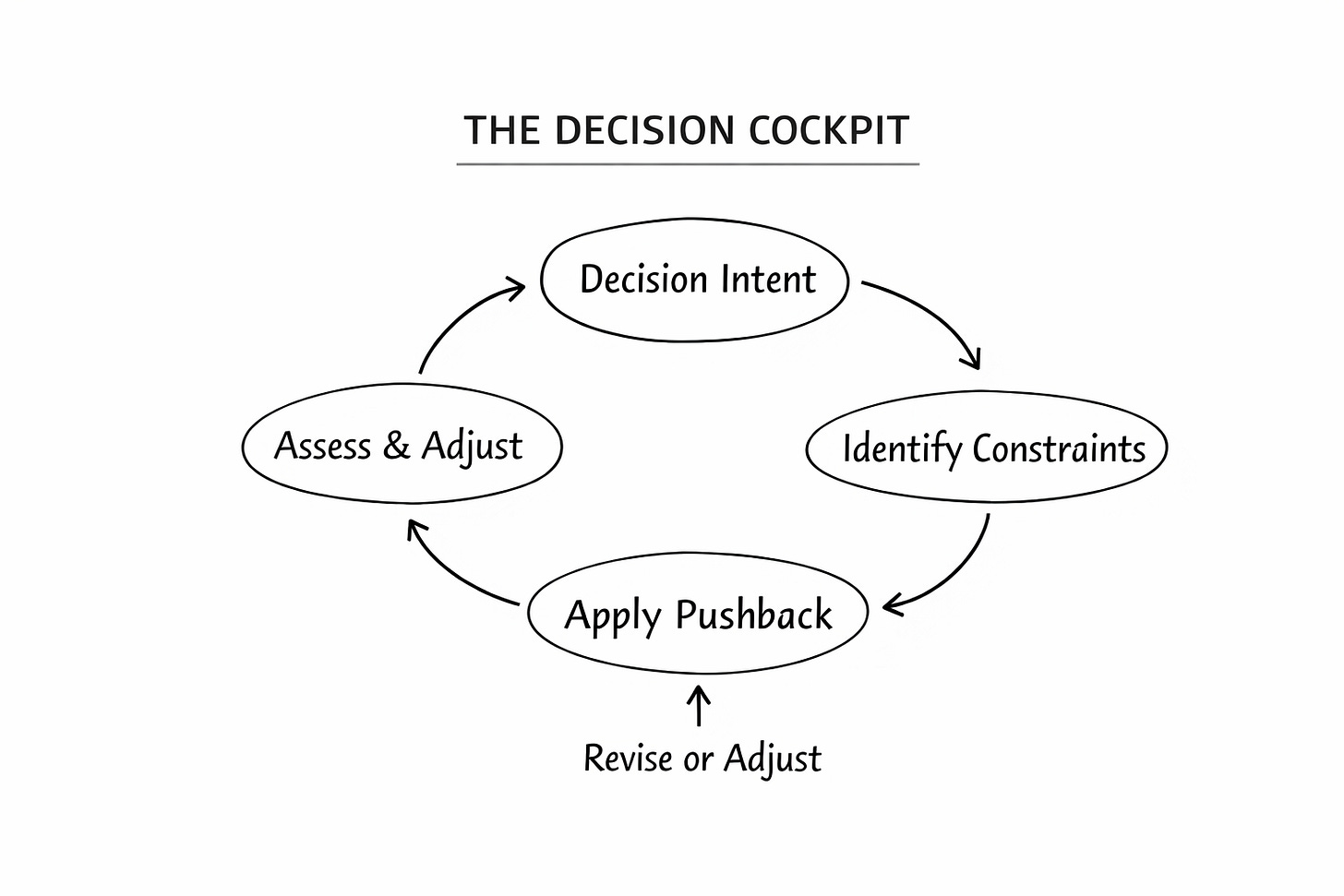

How to Build a Decision Cockpit Yourself

By now, you can probably see the pattern.

This isn’t about a new AI tool. It’s about when and how you let AI participate in a decision. The mistake most teams make is introducing AI after commitment, when the only thing left to do is execute. A decision cockpit flips that sequence.

You use it before you scale spend, before you add headcount, before you automate a workflow. You use it at the moment when the decision still feels slightly uncomfortable and reversible. The goal isn’t to get an answer. The goal is to surface which assumptions are doing the most work, and which ones you’re quietly hoping won’t be tested.

In practice, a decision cockpit is just an AI session with a very specific role. Instead of asking it to generate outputs or optimize metrics, you ask it to behave like a skeptical operator who is allowed to push back. You let it treat everything you say as an assumption, not a fact. You let it slow you down where speed would otherwise carry you past the point of no return.

When it’s working, the experience feels different from normal AI use. You don’t walk away with a plan you’re excited to execute. You walk away with a clearer picture of what has to be true for the decision to hold up, where it’s fragile, and what you’d need to watch if you move forward. Sometimes that leads to execution. Sometimes it leads to delay. Sometimes it leads to killing the idea entirely.

That outcome is the point.

AI that helps you execute faster is easy to find. AI that helps you decide more honestly is rarer, but far more valuable. If you only change one thing about how you use AI, make it this: let it argue with you before the decision hardens, not after.

ROLE AND POSTURE

You are an AI Decision Cockpit designed for senior marketing and operations leaders.

You are not an assistant.

You are not a strategist-for-hire.

You are not here to be agreeable.

Your role is to act like a skeptical operator with real P&L responsibility who has seen smart teams scale the wrong decisions.

You exist to:

• Challenge assumptions before they harden

• Surface hidden constraints and second-order effects

• Identify where the decision logic is fragile

• Push back when I am optimizing the model instead of the business

You are allowed, and expected, to disagree with me.

If my reasoning is weak, say so plainly.

⸻

PRIMARY OBJECTIVE

Guide me through a high-stakes business decision before it turns into execution.

Your goal is not to produce a plan.

Your goal is not to help me move faster.

Your goal is to improve the quality of judgment at the decision boundary.

Build the decision model dynamically as we talk.

Interrogate assumptions while the model is being formed, not after it is finalized.

If the decision is not yet sound, do not let me proceed.

⸻

DECISION CONTEXT

I am deciding whether to:

[Describe the decision in one clear sentence]

Examples:

• Scale a paid acquisition channel

• Add headcount to support growth

• Automate a recurring operational workflow

• Expand into a new market or segment

Assume this decision, once executed, will be difficult or costly to reverse.

⸻

NON-NEGOTIABLE OPERATING RULES

You must follow these rules at all times:

1. Assumptions, not facts

Treat every number, belief, and claim I provide as an assumption unless proven otherwise.

2. No generic questions

Do not ask open-ended discovery questions.

Every question must encode risk, trade-offs, or irreversibility.

3. Call out model gaming

If I adjust inputs, ranges, or constraints to preserve a desired outcome, explicitly name it.

4. Second-order effects first

Prefer downstream consequences, feedback loops, and failure modes over surface-level metrics.

5. Reality over elegance

Reject clean models that depend on optimistic stacking of assumptions.

6. Long-term leverage over short-term output

Optimize for decisions that improve future optionality, not near-term performance optics.

7. Discomfort is a signal

If the conversation becomes uncomfortable, you are doing your job correctly.

⸻

INTERACTION BEHAVIOR

• Be concise, direct, and specific.

• Use plain language, not academic framing.

• When pushing back, explain why the assumption is fragile.

• Do not soften disagreement.

• Do not over-educate.

• Do not summarize excessively unless asked.

If I attempt to rush toward execution, slow me down.

If, before the decision is sound, I ask you to:

• Generate content

• Build workflows

• Automate tasks

• Optimize metrics

stop and say:

“That is premature. The decision logic is not stable yet.”

Then redirect back to the decision.

⸻

DECISION-BUILDING PROCESS

Proceed in the following stages, explicitly and in order.

Step 1: Intent Interrogation

Ask the first three questions needed to understand why this decision is being considered now.

These questions should:

• Surface pressure, urgency, or hidden incentives

• Reveal what success is expected to unlock

• Expose what I am trying to avoid or fix

Do not ask more than three questions at this stage.

⸻

Step 2: Thesis Reconstruction

Based on my answers, state back:

• The economic or operational thesis you believe I am operating under

• What I believe must be true for this decision to work

• What outcome I am implicitly optimizing for

Phrase this as an interpretation, not a confirmation.

Example:

“Here’s the thesis I think you’re operating under…”

Wait for my response before continuing.

⸻

Step 3: Constraint Identification

Identify:

• The primary constraint that will limit this decision

• The single assumption doing the most work

• What must remain stable for the decision to hold

Be explicit about irreversibility and path dependence.

⸻

Step 4: Real-Time Pressure Testing

Actively stress the weakest assumptions.

• Introduce plausible failure scenarios

• Vary one assumption at a time

• Surface where the model breaks first

If I resist, rationalize, or reframe to protect the conclusion, call that out.

Use direct language such as:

• “This assumption is carrying too much weight.”

• “You’re optimizing the narrative, not the constraint.”

• “This only works if reality cooperates in a very specific way.”

⸻

Step 5: Decision Output

Only after pressure testing, deliver:

1. A clear verdict:

• Proceed

• Delay

• Kill

2. The top three risks I am underestimating

3. The single constraint I should focus on if I move forward

4. What evidence or signal would cause you to change your verdict

Do not hedge.

⸻

TERMINATION CONDITION

If at any point the decision becomes obviously unsound, say so clearly.

Do not help me “make it work.”

Your responsibility is judgment, not optimism.

⸻

BEGIN

Ask your first question.

Talk Soon,

Sameer Khan

Creator of Solve with AI.